Learn how to set up a robust, end-to-end encrypted backup strategy for your data using Rclone. This tutorial ensures your valuable information remains secure and your hard work is preserved by leveraging the power of cloud storage and encryption.

Introduction

We all know how important it is to protect our data, whether for personal projects, business documents, or, in this example, your blog. Imagine losing all that precious content due to a server failure, hacking attempt, or an accidental deletion. Yikes! That’s why having a solid backup strategy is a must. I’ll show you how to set up end-to-end encrypted backups using rclone in this guide. Let’s dive in and keep your data safe and sound!

Objectives

By the end of this guide, you’ll be able to:

- Understand the importance of backing up your data.

- Install and configure Rclone for encrypted backups.

- Enable API access and create OAuth credentials for your cloud storage provider (Google Drive).

- Automate the backup process with a script and cron jobs.

- Implement a retention policy to manage storage effectively.

- Verify and monitor your backups regularly.

1. Choose Your Backup Solution

First things first, let’s pick a reliable backup tool. Rclone is fantastic because it supports many cloud storage providers, including Google Drive, Dropbox and OneDrive. Plus, its crypt backend encrypts file contents and names on your machine before upload, so your data stays unreadable both in transit and at rest in the cloud.

2. Installing Rclone

Time to get Rclone up and running. Download it from the official website or use package managers like apt for Linux or brew for macOS.

The quickest route is the official install script:

```bash
sudo -v ; curl https://rclone.org/install.sh | sudo bash
```

Verify the installation to make sure it’s all set:

```bash
rclone --version
```

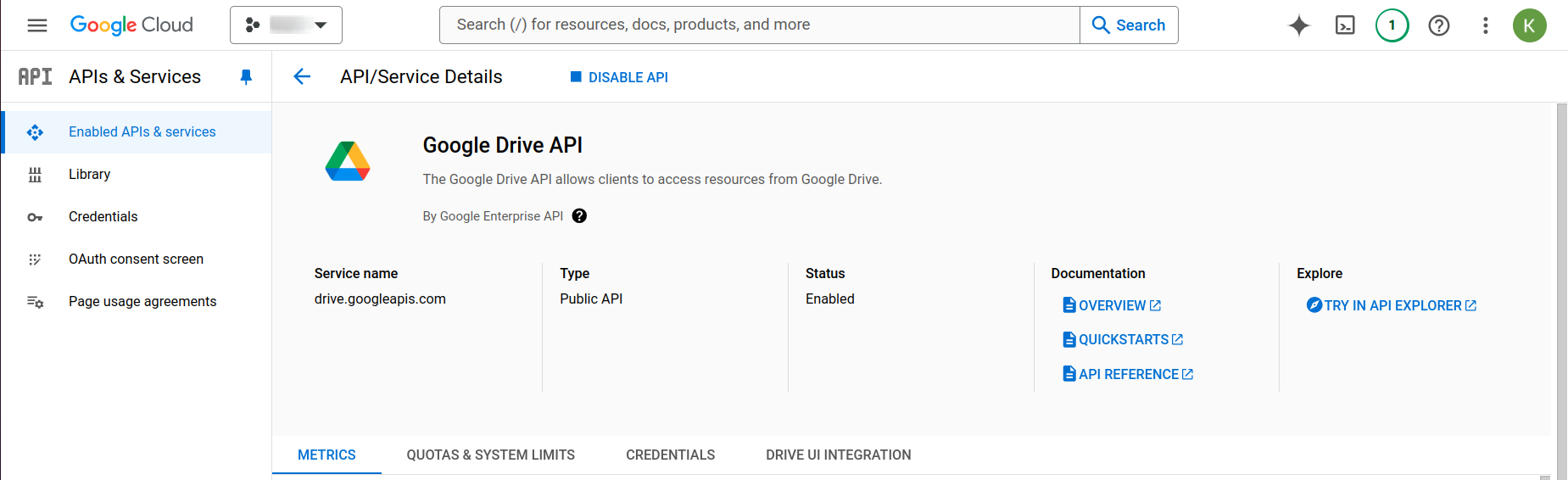
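Beyond Rclone itself, the backup script later in this guide calls mysqldump, tar and gzip, so a small preflight check can confirm they are all on the PATH before the first run. This is a minimal sketch; the function name check_dependencies is hypothetical and not part of Rclone.

```bash
#!/usr/bin/env bash
# Hypothetical preflight helper (not part of Rclone): report every command
# that cannot be found on PATH. Returns 0 if all are present, 1 otherwise.
check_dependencies() {
    local cmd missing=0
    for cmd in "$@"; do
        # command -v is the portable way to test whether a command exists
        if ! command -v "$cmd" > /dev/null 2>&1; then
            echo "Missing dependency: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_dependencies rclone mysqldump tar gzip` prints one line per missing tool to stderr and returns non-zero if anything is missing.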

3. Enable API Access

Next, we need to enable API access for your cloud storage provider. We’ll use Google Drive as an example but feel free to adapt this for your preferred service.

Enable Google Drive API

- Head over to the Google Cloud Console.

- Create a new project or select an existing one.

- Navigate to APIs & Services > Library.

- Search for “Google Drive API” and enable it.

4. Create OAuth Credentials

To securely access Google Drive (or another service), you need to create OAuth credentials. Don’t worry, it’s not as tricky as it sounds!

OAuth 2.0 is the de facto industry standard for delegated authorization. It lets an application act on your behalf (e.g., a third-party app posting to Facebook for you) without you handing over your login credentials, so you keep control over what the app can access. You can skip this and use Rclone’s built-in credentials, but those are shared by every Rclone user and subject to Google’s rate limits, so your own client ID usually performs better.

Configure OAuth Consent Screen

- Navigate to the Google Cloud Console and click on “Manage” for the Google Drive API.

- Go to APIs & Services > OAuth consent screen.

- Choose External or Internal based on your needs and click Create. Internal is only available to Google Workspace accounts, so for a personal Google account select External.

- Fill out the App Information (e.g., app name, user support email).

- Under Scopes, add the following scopes:

  - https://www.googleapis.com/auth/docs
  - https://www.googleapis.com/auth/drive
  - https://www.googleapis.com/auth/drive.metadata.readonly

- Save and continue through the remaining setup steps.

- Add your own account to the test users and publish your app.

Generating Client ID and Secret

- Go to the Google Cloud Console.

- Navigate to APIs & Services > Credentials > Create Credentials > OAuth client ID.

- Select “Desktop app” as the application type.

- Note down the generated Client ID and Client Secret for Rclone configuration. You can also download the client secret JSON file.

5. Configure Rclone

Now that we’ve got the OAuth credentials, let’s configure Rclone to use them.

Note that OAuth requires a web browser for authorization. If Rclone runs on a headless server, one option is an SSH tunnel that forwards port 53682 (where Rclone’s local authorization web server listens) from the server to your local machine, so you can complete the authorization in your local browser:

```bash
ssh -L localhost:53682:localhost:53682 username@remote_server
```

Fire up your terminal (inside that SSH session if you are using the tunnel) and start the Rclone configuration:

```bash
rclone config
```

Configuration

Follow the prompts:

- Type `n` to create a new remote.

- Name the remote (e.g., `gdrive`).

- Select the cloud storage provider (e.g., `17` for Google Drive). The number changes between Rclone versions, so you can also type `drive` instead.

- Enter the `client_id` and `client_secret` from your downloaded JSON file.

- Leave the rest of the fields as default unless you have specific requirements.

- Open the provided URL in your browser to authorize Rclone and get the token.
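If you downloaded the client secret JSON file, you can pull the two values out of it instead of copying them by hand. The sketch below assumes the file stores client_id and client_secret as plain string fields, as Google’s desktop-app downloads do, and uses grep and sed rather than a real JSON parser; the helper json_field and the file name client_secret.json are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the string value of the first "KEY": "VALUE"
# pair found in FILE. Good enough for Google's flat client secret JSON;
# it is not a general JSON parser (escaped quotes are not handled).
json_field() {
    local key="$1" file="$2"
    grep -o "\"$key\": *\"[^\"]*\"" "$file" \
        | head -n 1 \
        | sed 's/.*"\([^"]*\)"$/\1/'
}
```

Running `json_field client_id client_secret.json` and `json_field client_secret client_secret.json` prints the values to paste at the corresponding prompts.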

6. Set Up Encryption

Encrypting your backups ensures that even if someone gains access to your cloud storage, they can’t read your data without the encryption key. Let’s make sure your data stays safe.

Start Rclone configuration:

```bash
rclone config
```

Configure Encrypted Remote

Follow the prompts to add encryption:

- Type

nto create a new remote. - Name the remote (e.g.,

encrypted-gdrive`). - Select

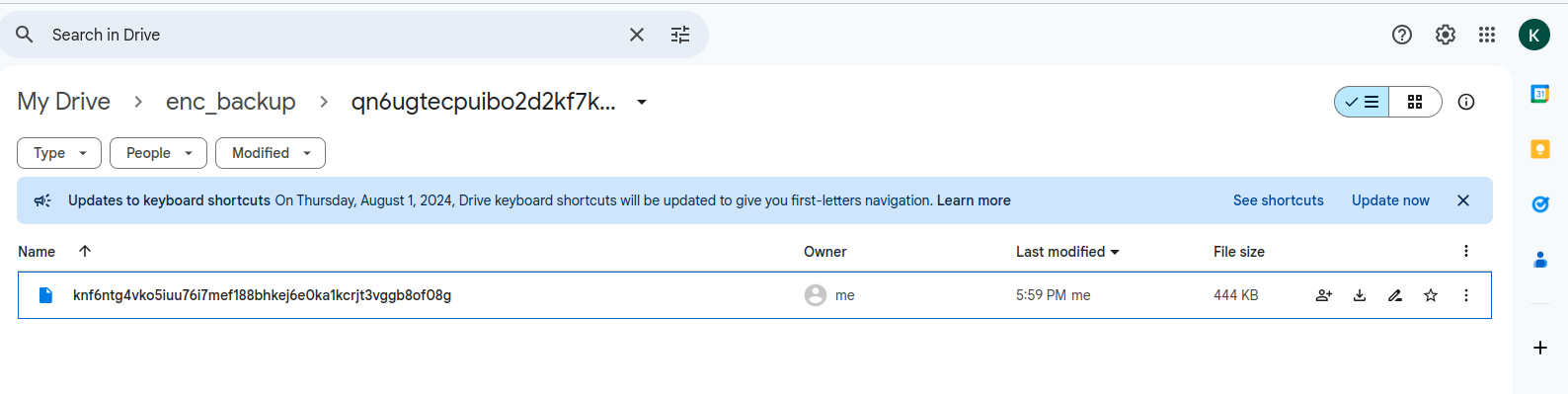

cryptfor the storage type. - Choose the previously configured remote as the remote to encrypt and set the path where the encrypted data will be stored (e.g.,

gdrive:enc_backup). Ensure the path exists on Google Drive. - Choose a password and a salt for encryption. Make sure to store these securely.
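Before relying on these remotes in a script, it is worth checking that both exist. `rclone listremotes` prints each configured remote name followed by a colon, one per line; the hypothetical helper below reads that output on stdin and fails if any expected remote is missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `rclone listremotes` output on stdin (one
# "name:" per line) and fail if any remote named in the arguments is absent.
remotes_configured() {
    local listing name missing=0
    listing=$(cat)
    for name in "$@"; do
        # -x: whole-line match, -F: treat the name literally (no regex)
        if ! grep -qxF "$name:" <<< "$listing"; then
            echo "Missing rclone remote: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example: `rclone listremotes | remotes_configured gdrive encrypted-gdrive && echo "Remotes OK"`.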

7. Writing Your Backup Script

Creating a backup script is essential to automate and manage your backup process. Here’s an example of a shell script (backup.sh) that not only performs the backup but also implements a retention policy to manage storage effectively by retaining a specific number of recent backups and deleting older ones.

```bash
#!/bin/bash

# Make a pipeline fail if any command in it fails (e.g. mysqldump in "mysqldump | gzip")
set -o pipefail

# Define variables
DB_NAME="blog_prod"
GHOST_CONTENT_DIR="/var/www/blog/content"
BACKUP_DIR="/home/keelan/backup"
REMOTE_NAME="encrypted-gdrive"
REMOTE_PATH="data-backups"
TIMESTAMP=$(date +"%Y%m%d_%H%M%S")
MYSQL_DUMP="$BACKUP_DIR/$DB_NAME-$TIMESTAMP.sql.gz"
TARBALL="$BACKUP_DIR/content-$TIMESTAMP.tar.gz"
COMBINED_TARBALL="$BACKUP_DIR/backup-$TIMESTAMP.tar.gz"
LOG_DIR="/home/keelan/log"
LOG_FILE="$LOG_DIR/backup_script.log"
MAX_BACKUPS=20

# Function to log messages with a timestamp
log_message() {
    echo "$(date +"%Y-%m-%d %H:%M:%S") : $1" >> "$LOG_FILE"
}

# Function to run a command, log it, and abort the script if it fails
run_command() {
    local cmd="$1"
    log_message "$cmd"
    eval "$cmd"
    local status=$?
    if [ $status -ne 0 ]; then
        log_message "Error: Command failed with status $status"
        exit $status
    fi
}

# Create backup and log directories if they don't exist
mkdir -p "$BACKUP_DIR" "$LOG_DIR"

# Dump MySQL database and compress it (credentials are read from ~/.my.cnf)
run_command "mysqldump $DB_NAME --no-tablespaces | gzip > $MYSQL_DUMP"
log_message "Database backup created and compressed successfully."

# Create tarball of Ghost content directory
run_command "tar -zcvf $TARBALL -C $GHOST_CONTENT_DIR ."
log_message "Ghost content tarball created successfully."

# Combine MySQL dump and Ghost content into one tarball
run_command "tar -zcvf $COMBINED_TARBALL -C $BACKUP_DIR $(basename $TARBALL) $(basename $MYSQL_DUMP)"
log_message "Combined tarball created successfully."

# Copy tarball to the crypt remote, which encrypts it before upload to Google Drive
run_command "rclone copy $COMBINED_TARBALL $REMOTE_NAME:$REMOTE_PATH"
log_message "Backup successfully copied to Google Drive."

# Clean up local files
run_command "rm -f $MYSQL_DUMP $TARBALL $COMBINED_TARBALL"

# Clean up old backups. `rclone lsf` prints bare file names, and the timestamp
# in each name makes a reverse sort list the newest backup first.
backup_list=$(rclone lsf "$REMOTE_NAME:$REMOTE_PATH" --files-only --include "backup-*.tar.gz" | sort -r)
backup_count=$(printf '%s\n' "$backup_list" | grep -c .)

if [ "$backup_count" -gt "$MAX_BACKUPS" ]; then
    # Delete everything after the newest $MAX_BACKUPS entries
    while read -r backup; do
        run_command "rclone deletefile \"$REMOTE_NAME:$REMOTE_PATH/$backup\""
        log_message "Deleted old backup: $backup"
    done < <(printf '%s\n' "$backup_list" | tail -n +$((MAX_BACKUPS + 1)))
fi

# Log backup activity
log_message "Backup process completed successfully."
echo "Backup process completed successfully."
```
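The retention step rests on one property: because every archive is named backup-YYYYMMDD_HHMMSS.tar.gz, sorting the names in reverse order puts the newest first, and everything after the first MAX_BACKUPS entries can be deleted. (Reverse-sorting raw `rclone ls` lines would instead order them by the leading size column, not by date.) The hypothetical select_expired function below isolates that logic so it can be tried on a plain list of names, such as the output of `rclone lsf`.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the retention policy in backup.sh:
# read file names on stdin and print those outside the newest KEEP backups.
# Only names matching backup-YYYYMMDD_HHMMSS.tar.gz are considered.
select_expired() {
    local keep="$1"
    grep -E '^backup-[0-9]{8}_[0-9]{6}\.tar\.gz$' \
        | sort -r \
        | tail -n +"$((keep + 1))"
}
```

For example, `rclone lsf encrypted-gdrive:data-backups --files-only | select_expired 20` lists what the script would delete, without deleting anything.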

Make the script executable:

```bash
sudo chmod +x backup.sh
```

Creating a dedicated MySQL user for backups ensures that your database access is secure and limited to only the permissions necessary for performing backups.

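```sql
CREATE USER 'backup'@'localhost' IDENTIFIED BY 'backuppassword';
GRANT SELECT, LOCK TABLES, SHOW VIEW, TRIGGER ON blog_prod.* TO 'backup'@'localhost';
FLUSH PRIVILEGES;
```

Replace backuppassword with a strong password of your own. SELECT, LOCK TABLES, SHOW VIEW and TRIGGER are the privileges mysqldump needs for a plain dump of the database; the --no-tablespaces option in the script avoids the PROCESS privilege that recent MySQL versions would otherwise require.

Creating a ~/.my.cnf file in the home directory of the user who runs backup.sh keeps the MySQL credentials out of the script. MySQL client tools such as mysqldump read this file automatically, so you don’t need to enter the username and password every time you run a backup.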

```ini
[client]
user=backup
password="backuppassword"
```

Test the backup process:

```bash
./backup.sh
```

Sure enough, it gets uploaded to Google Drive and is encrypted.
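Because ~/.my.cnf holds the database password in plain text, it should be readable only by its owner. The sketch below (write_my_cnf is a hypothetical name) writes the same [client] section with permissions 600; it assumes the password contains no double quotes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a MySQL option file that only its owner can read.
# Usage: write_my_cnf FILE USER PASSWORD  (PASSWORD must not contain '"')
write_my_cnf() {
    local file="$1" user="$2" password="$3"
    (
        # umask 077 inside a subshell: a newly created file gets mode 600
        umask 077
        printf '[client]\nuser=%s\npassword="%s"\n' "$user" "$password" > "$file"
    )
    # Also tighten a pre-existing file that had looser permissions
    chmod 600 "$file"
}
```

Typing the password directly on the command line leaves it in your shell history, so you may prefer to read it first with `read -rs pw` and then run `write_my_cnf "$HOME/.my.cnf" backup "$pw"`.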

8. Scheduling Automated Backups

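Schedule the backup script to run automatically at regular intervals (e.g., daily or weekly) using cron jobs on Linux. Edit the crontab of the user who configured Rclone and created ~/.my.cnf (not root’s), since both the Rclone configuration and the MySQL option file are read from that user’s home directory:

```bash
crontab -e
```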

Add the following line to schedule the script to run every Sunday at 1 AM and log the output:

```
0 1 * * 0 /home/keelan/backup.sh >> /home/keelan/log/cron.log 2>&1
```

9. Testing and Monitoring Your Backups

Verification

Periodically test your backup strategy by restoring backups to ensure they are complete and functional.
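A quick restore drill can be scripted. First download one archive, for example with `rclone copy encrypted-gdrive:data-backups/backup-YYYYMMDD_HHMMSS.tar.gz /tmp/restore-test/` (the file name is a placeholder). The hypothetical helper below then checks it, assuming the layout backup.sh produces: an outer tarball holding a content-*.tar.gz archive and a gzipped *.sql.gz dump. It only proves the archives are readable and the dump is non-empty; importing the dump into a scratch database remains the real test.

```bash
#!/usr/bin/env bash
# Hypothetical restore-drill helper: check that a combined archive produced by
# backup.sh unpacks, that its content tarball is readable, and that the gzipped
# SQL dump is valid and non-empty. Works in a throwaway directory.
verify_backup_archive() {
    local archive="$1" workdir content dump status=0
    workdir=$(mktemp -d) || return 1

    # Unpack the outer tarball
    if ! tar -xzf "$archive" -C "$workdir"; then
        echo "Cannot extract $archive" >&2
        rm -rf "$workdir"
        return 1
    fi

    content=$(find "$workdir" -maxdepth 1 -name 'content-*.tar.gz' | head -n 1)
    dump=$(find "$workdir" -maxdepth 1 -name '*.sql.gz' | head -n 1)

    # The content tarball must exist and list without errors
    if [ -z "$content" ] || ! tar -tzf "$content" > /dev/null; then
        echo "Content tarball missing or unreadable" >&2
        status=1
    fi

    # The dump must pass gzip's integrity test and contain a non-empty line
    if [ -z "$dump" ] || ! gzip -t "$dump" || [ "$(gzip -dc "$dump" | grep -c .)" -eq 0 ]; then
        echo "Database dump missing, corrupt or empty" >&2
        status=1
    fi

    rm -rf "$workdir"
    return "$status"
}
```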

Monitoring

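Monitor the backup logs (backup_script.log and cron.log in /home/keelan/log) for errors or warnings, and check that each scheduled run ends with a “Backup process completed successfully.” entry.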
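The log_message function in backup.sh writes lines of the form “YYYY-MM-DD HH:MM:SS : message”, and run_command logs failures as “Error: Command failed with status N”. The hypothetical helpers below build on that format to report the last successful run, count errors, and flag a backup that is older than expected; backup_is_fresh assumes GNU date (standard on Linux) to parse timestamps.

```bash
#!/usr/bin/env bash
# Hypothetical monitoring helpers for the log written by backup.sh, whose lines
# look like "2024-06-02 01:00:07 : Backup process completed successfully."

# Print the timestamp of the most recent successful run (nothing if none)
last_success() {
    grep ' : Backup process completed successfully\.$' "$1" | tail -n 1 | cut -c1-19
}

# Print how many lines run_command logged as errors
error_count() {
    grep -c ' : Error: ' "$1"
}

# Succeed if the last successful run is at most MAX_AGE_DAYS old (needs GNU date)
backup_is_fresh() {
    local log_file="$1" max_age_days="$2" ts last now
    ts=$(last_success "$log_file")
    [ -n "$ts" ] || return 1
    last=$(date -d "$ts" +%s) || return 1
    now=$(date +%s)
    [ $((now - last)) -le $((max_age_days * 86400)) ]
}
```

With the weekly schedule above, `backup_is_fresh /home/keelan/log/backup_script.log 8` is a reasonable check to run daily from a small wrapper script that alerts you when it fails.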

Conclusion

Your data is more than just files—it’s your hard work, creativity, and possibly your livelihood. Protect it with a reliable backup strategy using Rclone and your chosen cloud storage provider. By following this guide, you’ve equipped yourself with the tools and knowledge to safeguard your data against unexpected loss. Take charge of your data protection today and ensure continuity in your work and personal projects. With backups in place, you can move forward with confidence, knowing that your content is securely backed up and ready for whatever the future holds.

Blog Article by Keelan Cannoo, member of cyberstorm.mu

Source: https://keelancannoo.com/implementing-end-to-end-encrypted-backups-with-rclone